AI Upheaval: Did Poor Governance Kick Sam Altman Out of OpenAI? Nobody Saw it Coming

Shall we rethink the vendor dependency risk in AI and the future of AI governance?

We had not planned to write another post about OpenAI. But last Friday Sam Altman was fired by OpenAI’s board, and Greg Brockman decided to leave after being removed from his chairman position. Three senior researchers followed suit shortly after.

The board is concerned that Sam Altman “was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities.”

Perhaps after the Google Meet calls with Altman and Brockman, the board members patted themselves on the back and thought that they had acted in the interest of the nonprofit's mission. The problems are:

Altman is hitting a home run: He is the de facto face of this AI wave, epitomized by ChatGPT and more recently the DevDay;

Altman and Brockman are both co-founders: A co-founder fight is a nightmare for any start-up;

This board isn’t set up to answer to investors, and certainly did not: Microsoft, which invested $13B into OpenAI and holds 49% of the shares in the for-profit subsidiary, wasn’t aware of the matter until a few minutes prior to the announcement; and,

The action has wrecked the credibility and reputation of the board itself in front of the public and its own employees.

They threw the baby out with the bathwater, and it backfired: the future of OpenAI is now uncertain, even if they bring back Sam Altman.

What It Means for the Ecosystem

1. Obsolete Product Function vs. Vendor Dependency Risk

Do you prefer building a state-of-the-art product with GPT-4’s advanced capability, a worse product that can be reasonably accommodated by other models, or a combination of both?

It usually makes sense for most to pick the first option, despite the bashing of “GPT-wrappers”. GPT-4 hallucinates 24% less than Google’s PaLM, and the price OpenAI charges for GPT-4 is ~2% of the actual cost to run the machines on Azure Cloud, assuming the cost is at the 1-year reserved instance retail price. The best open-source foundation model out there, Llama 2, can’t perform some of the tasks that GPT-4 can and is at least 2x more expensive to host oneself.

There are two things techies are good at grabbing: free food and good deals. Some sources tabulated that at least 1,000 companies are relying on OpenAI’s API service. The openai python package was downloaded over 11 million times in October 2023.

It’s all nice and cozy as long as OpenAI remains reliable. People felt safe enough that someone even suggested treating OpenAI as a 24/7/365 utility service. But absence of evidence is not evidence of absence, as Nassim Taleb pointed out. At DevDay, nobody outside OpenAI would have been foolish enough to bet even $1 on Sam’s departure. Yet he was fired two weeks later (and might return). In much the same way, the size of single-vendor risk is unpredictable until it materializes.

Given the abrupt chaos surrounding OpenAI’s governance, investors and creators need to assess the tail risk of single-vendor dependency that a start-up is taking with GPT-4. There are quite a few great companies working on interoperability between models, such as Martian, which is covered in the deals section below.

Setting up a multi-LLM environment from the start seems to cost less, while switching from single-LLM to multi-LLM later might cost more. The model switching cost breakdown:

Model: Medium

Solutions in the space: Replicate, Huggingface, MLFlow Gateway

Inference framework/Agents: Low (Likely only involves swapping endpoints)

Solutions: Langchain, AutoGPT, LlamaIndex

Prompts: High (Different model requires different prompts)

Tools that LLM accesses: Medium to High

The cost depends on whether the tools integrate with the inference framework or with the model provider (illustrated below)
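To make the breakdown above a bit more concrete, here is a minimal Python sketch of what a multi-LLM setup could look like. It is only an illustration under our own assumptions: `ModelRoute`, `FallbackClient`, and the two stub providers are hypothetical names, not part of any vendor SDK or of the frameworks listed above. The sketch shows why swapping endpoints is cheap (each provider is just a function) and why prompts are the expensive part (each model carries its own template).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A provider is any callable that takes a prompt and returns a completion.
# In a real system each one would wrap a vendor SDK or HTTP call; these are stubs.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


@dataclass
class ModelRoute:
    name: str             # hypothetical label such as "primary" or "backup"
    call: Provider        # function that performs the actual request
    prompt_template: str  # per-model prompt, since prompts rarely transfer as-is


class FallbackClient:
    """Tries each route in order and returns the first successful answer."""

    def __init__(self, routes: List[ModelRoute]):
        if not routes:
            raise ValueError("at least one route is required")
        self.routes = routes

    def complete(self, question: str) -> Dict[str, str]:
        errors = []
        for route in self.routes:
            # Render the prompt with the template tuned for this model.
            prompt = route.prompt_template.format(question=question)
            try:
                return {"model": route.name, "text": route.call(prompt)}
            except Exception as exc:  # any provider failure triggers fallback
                errors.append(f"{route.name}: {exc}")
        raise AllProvidersFailed("; ".join(errors))


def flaky_primary(prompt: str) -> str:
    # Stub that simulates the primary vendor being unavailable.
    raise ConnectionError("service unavailable")


def stub_backup(prompt: str) -> str:
    # Stub that simulates a working backup model by echoing the prompt.
    return f"[backup] {prompt}"


client = FallbackClient([
    ModelRoute("primary", flaky_primary, "Answer concisely: {question}"),
    ModelRoute("backup", stub_backup, "### Instruction:\n{question}\n### Response:"),
])

result = client.complete("What is vendor risk?")
print(result["model"])
```

In this sketch the routing code never changes when a vendor is added or removed; the work sits in writing and testing a prompt template per model, which matches the "High" rating for prompts above.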

2. Corporate Governance of OpenAI, and where the board is on the spectrum of Safety vs Profitability, are important topics for investors going forward

Being conservative versus aggressive in the development direction of a company is a tale as old as time for many companies and has been the key reason for founding teams to part ways in many instances. However, this case is a bit different with OpenAI because the last time we had such a transformative technology with the capability to create chaos and existential risks for humanity might have been the creation of the internet. So, the fallout between the company’s safety concerns and profitability is significant here.

People have been speculating that Altman and Brockman's departure might be due to internal board disagreements over the company's profit direction, with the speed of development and risk concerns possibly at odds. Others suspect internal politics and potential safety concerns. Other opinions argue about Altman's pursuit of AI chips.

However, we are not here to guess exactly what happened before the firing. Regardless of the reason, the impact of the turmoil caused by this event will be felt by OpenAI's investors, users, and partners in the long term. To understand why it happened in the first place, we might want to dive into OpenAI's company structure.

OpenAI has a fairly unusual structure, with much of the power concentrated in the Board of Directors. This board governs the nonprofit arm, as well as OpenAI GP LLC, which controls the for-profit aspect of the company. The investors and employees cannot steer the fate of the company, even though they hold a material interest in it.

Hanhan: “As a philosophical question, I doubt our ability as a species to act in the best interest of others devoid of personal incentives. Given that predisposition, it’s absurd to have outsiders gaining significant control over an entity, which seems to be the case for the ousting of Sam Altman (1 inside director vs. 3 outside directors).”

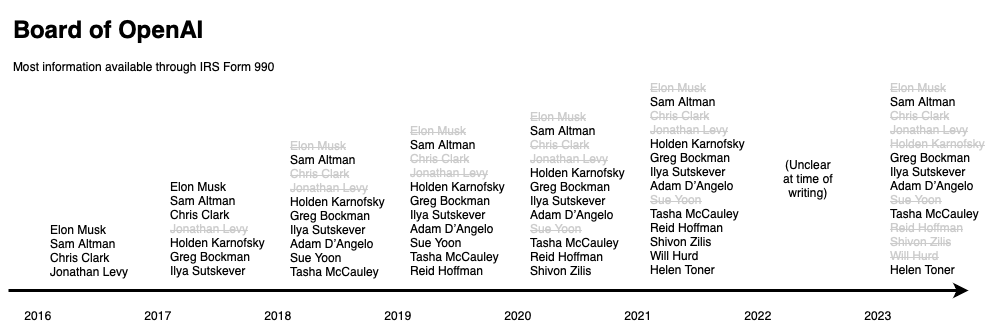

We have also noticed the board's size diminishing over the years, shrinking to six members at the time of the firing.

The latest board that fired Altman is heavily focused on AI regulation and government involvement. Two of its members, Helen Toner and Tasha McCauley, both serve on the Advisory Board for the 2023 UK Center for the Governance of AI.

Another member, Adam D’Angelo, is the CEO of Quora, which is developing Poe, an AI chat platform (or, as the end goal, a web browser for AI), and so seems to compete with OpenAI. We wonder if he should be on the board considering this potential competition down the road.

The board is currently led by Ilya Sutskever, a central figure in AI scientific development who leans toward cautious optimism. However, the decreasing size of the board suggests that OpenAI might be losing its balance between the safety-focused scientific side (a common priority in AI foundation companies), the business side, and the individuals capable of bridging these two areas. Moving forward, we expect OpenAI's investors to closely monitor several aspects:

The composition of the board, the unique structure of the company, and the power dynamics between the nonprofit and for-profit sectors.

The balance of interests and perspectives represented on the board.

Potential competition among board members.

We are eagerly anticipating seeing how the situation at OpenAI unfolds. Despite the current challenges, we remain optimistic about the company's ability to navigate through these complexities and continue focusing on its groundbreaking research and innovative products. We anticipate that more companies will start exploring the possibility of developing and utilizing open-source APIs or those from competitors. This approach could serve as a backup and leverage in case similar situations arise in the future. The idea is to ensure continuity and flexibility in their operations, recognizing the volatile nature of the industry and the importance of having diverse technological resources at their disposal.

Additionally, the unique structure and board composition of OpenAI will remain a focal point of interest as numerous companies and investors seek to strike a balance between innovation, safety, and profitability. The way OpenAI responds to these challenges may set a precedent for future endeavors in the field, potentially influencing the broader landscape of AI development and governance.

🔥 AI Deals of the Week

Martian Emerged from Stealth and Raised $9M in Seed

Keywords: LLM Deployment, Optimization

Martian has developed an LLM Model Router, which routes each LLM query to the model best suited to handle it. The round was co-led by NEA and Prosus Ventures, with participation from Carya Venture Partners and General Catalyst.

Polimorphic Raised $5.6M in Seed to Build AI for Government

Keywords: Government, Process Automation

Polimorphic provides automation for local governments to automate services for constituent requests, processes, and payments. There are 30 governments actively using the platform. The round is led by M13 with participation from Pear and Shine Capital.

Kyutai, a French Open-Source AI Lab, Launched on Friday

Keywords: Open-Source, Non-profit

Scientists from Meta’s AI research team, Google’s DeepMind division, Inria and other places have joined the lab. The €300M funding comes from Xavier Niel, Rodolphe Saadé, Eric Schmidt’s foundation, and other donors.

HockeyStack Raised a $2.7M Seed Round to Help B2B Companies with Marketing Analytics

Keywords: Marketing, Analytics

HockeyStack provides marketing analytics and AI modeling to scale marketing efforts with insight. General Catalyst, YCombinator, Soma Capital and others provided the funding.

OpsLab Secured $5M in Seed to Optimize Ops Planning

Keywords: Operations, Planning, Dispatch

OpsLab provides a smart assistant to help airlines, the USAF, and road transport operators improve dispatching efficiency. Cultivation Capital led the round with participation from Storm Ventures.

Artisan Raised $2.3M in Pre-Seed with Human-Like AI Digital Workers

Keywords: Sales, End-to-End

Artisan creates human-like AI workers that integrate with human teams. It intends to improve the AI workers’ performance through constant data collection and updates. The funding comes from YCombinator, Bayhouse Capital and Oliver Jung.

Lynx Raised €17M in Series A to Combat Financial Fraud

Keywords: Fraud Detection

Lynx uses behavioral data to provide real-time fraud detection for financial institutions. With a strong foothold in Brazil, its users include Cielo and Banco Santander. Forgepoint Capital led the round with participation from Banco Santander.

BlastPoint Raised $8M+ to Help Companies Target Customers Better

Keywords: Marketing, Customer Acquisition, Analytics

BlastPoint provides customer analytics and helps companies increase customer engagement through data insight in highly regulated industries. Curql provided the funding.

Fractl Raised $1.025M in Pre-Seed to Build Low-Code Declarative Language that Blends AI Code-Generation

Keywords: Code Generation, Programming, Low-Code

Fractl builds a low-code open-source language that provides business-logic-level abstraction with declarative syntax. It intends to use AI to help developers focus more on the business logic than on the code implementation. WestWave Capital led the round with participation from January Capital and Arka Venture Labs.

Atlas Raised $6M in Two Rounds with AI 3D Model Assets Generation

Keywords: Gaming, 3D Models

Atlas uses AI to generate 3D assets used in games and other 3D content. It has partnered with some of the biggest AAA studios. The $4.5M round was led by 6th Man Ventures, and the $1.5M round was led by Collab+Currency.

Haut.AI Raised €2M in Seed for Customized Skincare AI

Keywords: Beauty, Skincare, Image Classification

Haut.AI provides skin condition early detection, diagnosis, and personalized care. It has partnered with brands including Beiersdorf and Ulta Beauty for them to provide personalized product suggestions in-store and online. LongeVC and the VC arm of Grupo Boticário shareholders provided the funding.

Please leave a comment below 💬 with your thoughts!